AMD Instinct™ MI300 Series Accelerators

Leadership Generative AI GPUs and APUs

Enhancing AI and HPC Capabilities

The AMD Instinct™ MI300 Series accelerators, including the MI300X and MI300A, are designed for the most demanding AI and HPC workloads. They combine high compute throughput, large memory capacity, high memory bandwidth, and support for specialized data formats.

AMAX MI300 Series Accelerators

AceleMax DGS-424AI

3U system with 4x AMD Instinct MI300A accelerators and 4x FHFL PCIe slots for additional GPUs

| Component | Specification |
| --- | --- |
| APUs | 4x AMD MI300A |
| Memory | 512 GB HBM3 |
| Storage | 8x 2.5″ hot-swap NVMe drive bays |

AceleMax DGS-428A

8U air-cooled system with 8x AMD Instinct MI300X accelerators

| Component | Specification |
| --- | --- |
| CPUs | Dual AMD EPYC 9004 |
| GPUs | 8x AMD MI300X |
| Memory | 6 TB 4800 MT/s DDR5 |
| Storage | 18x 2.5″ hot-swap NVMe drives |

AMD Instinct MI300X Platform

The AMD Instinct MI300X Platform integrates eight MI300X GPU OAM modules, connected by 4th-Gen AMD Infinity Fabric™ links, into a standard OCP design. It offers up to 1.5TB of HBM3 capacity for low-latency AI processing. This plug-and-play platform can shorten time to market and reduce development costs when adding MI300X accelerators to existing AI rack and server infrastructure.

304 GPU Compute Units

192 GB HBM3 Memory

5.3 TB/s Peak Theoretical Memory Bandwidth
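The per-accelerator figures above relate to the platform-level capacity by simple multiplication. The following is a minimal sketch of that arithmetic, assuming decimal units (1 TB = 1000 GB) and assuming the platform totals are plain sums across its eight GPUs. The constant and function names are illustrative only and are not part of any AMD or AMAX tool; the summed bandwidth is a theoretical aggregate, not a measured figure.

```python
# Per-accelerator figures quoted on this page for the MI300X.
HBM3_PER_GPU_GB = 192
PEAK_BW_PER_GPU_TBPS = 5.3
GPUS_PER_PLATFORM = 8


def platform_totals(gpus=GPUS_PER_PLATFORM):
    """Return (total HBM3 in TB, summed peak bandwidth in TB/s) for `gpus` accelerators."""
    total_memory_tb = gpus * HBM3_PER_GPU_GB / 1000  # decimal TB (assumption)
    total_bw_tbps = gpus * PEAK_BW_PER_GPU_TBPS      # simple sum of per-GPU theoretical peaks
    return total_memory_tb, total_bw_tbps


memory_tb, bandwidth_tbps = platform_totals()
print(f"{memory_tb:.3f} TB HBM3, {bandwidth_tbps:.1f} TB/s aggregate peak")
```

Eight accelerators at 192 GB each give 1536 GB, which is the "up to 1.5TB" quoted for the MI300X Platform.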

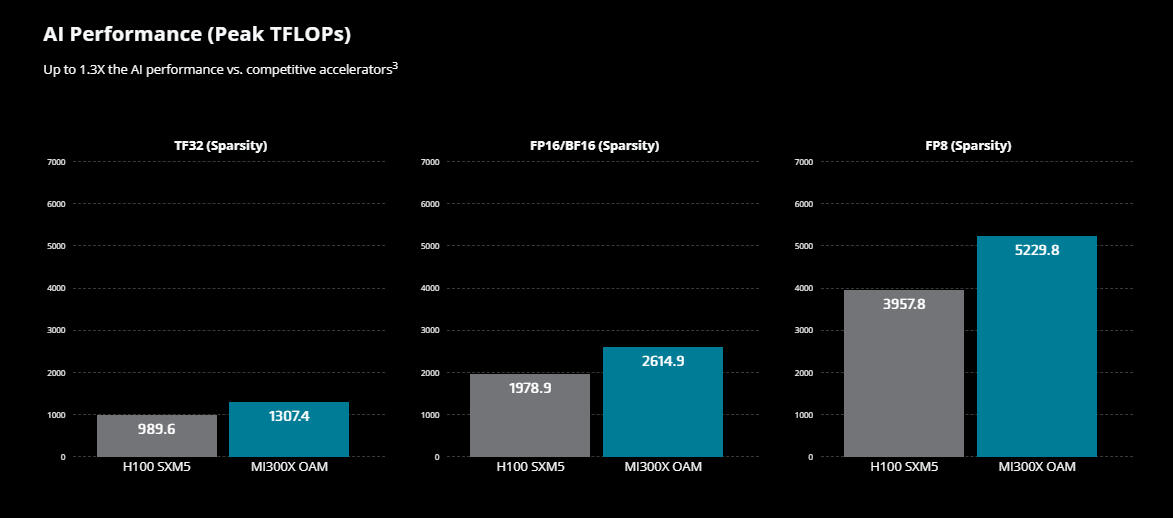

Outpacing H100 AI Performance

Experience next-generation AI capabilities with the AMD Instinct MI300X. Designed to outperform NVIDIA's H100, the MI300X offers higher memory capacity and bandwidth, both essential for complex AI models and data-intensive tasks. With 192 GB of HBM3 memory per accelerator (up to 1.5TB across the eight-GPU MI300X Platform) and enhanced compute performance, the MI300X is built for efficient AI computation and offers a significant advantage in memory-bound applications such as Large Language Models (LLMs).
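To make the memory-bound argument concrete, here is a rough back-of-the-envelope sketch, not a benchmark. It assumes a hypothetical 70-billion-parameter model, counts weights only (ignoring KV cache, activations, and framework overhead), and uses the 192 GB and 5.3 TB/s per-accelerator figures from this page. The per-token lower bound assumes every weight is read from HBM once per generated token at peak theoretical bandwidth, which real workloads will not reach. All names are illustrative.

```python
HBM3_CAPACITY_GB = 192      # per MI300X, from this page
PEAK_BANDWIDTH_TBPS = 5.3   # per MI300X, peak theoretical, from this page

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1, "int8": 1}


def weight_footprint_gb(params_billion, precision):
    """Weights-only footprint in decimal GB (billions of params x bytes per param)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits_on_one_gpu(params_billion, precision):
    """True if the weights alone fit in one accelerator's HBM3."""
    return weight_footprint_gb(params_billion, precision) <= HBM3_CAPACITY_GB


def min_ms_per_token(params_billion, precision):
    """Idealized lower bound: time to stream all weights once at peak bandwidth."""
    gigabytes = weight_footprint_gb(params_billion, precision)
    seconds = gigabytes / (PEAK_BANDWIDTH_TBPS * 1000)  # GB / (GB/s)
    return seconds * 1000


# Hypothetical 70B-parameter model (assumption, not a figure from this page).
for precision in ("fp16", "fp8"):
    print(
        precision,
        f"{weight_footprint_gb(70, precision)} GB,",
        "fits" if fits_on_one_gpu(70, precision) else "does not fit",
        f"on one GPU, >= {min_ms_per_token(70, precision):.1f} ms/token",
    )
```

Under these assumptions, both capacity (whether the model fits without being split across devices) and bandwidth (how quickly weights can be streamed) bound performance, which is why the two figures are highlighted together.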

Graph courtesy of AMD

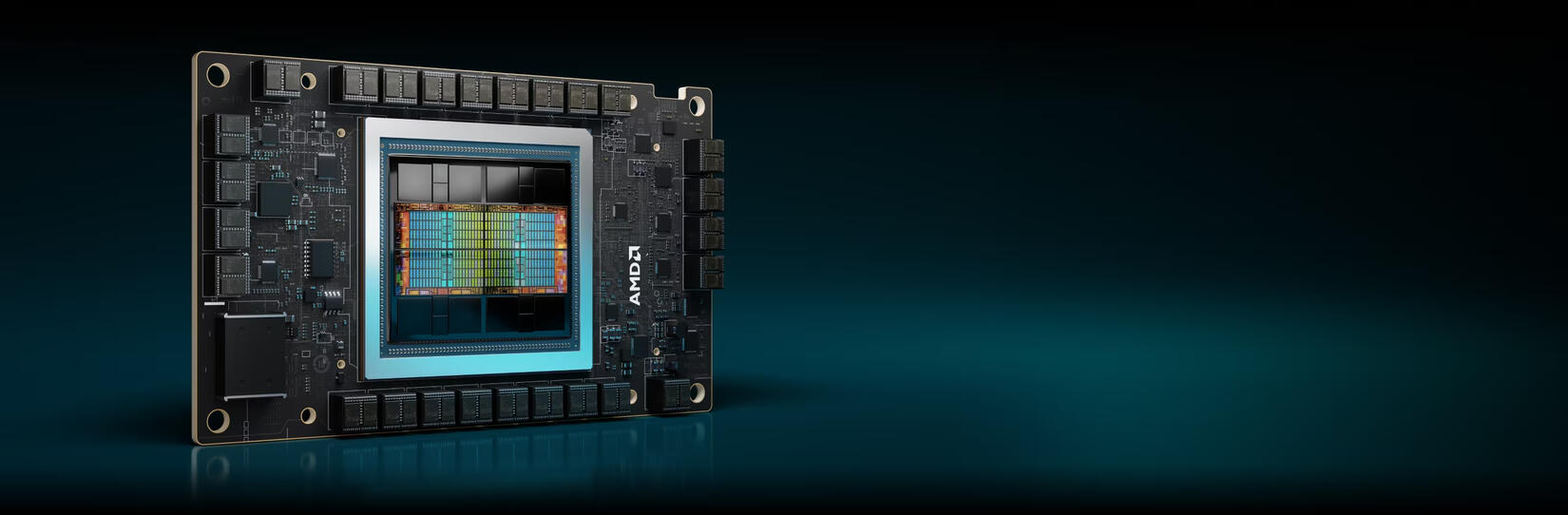

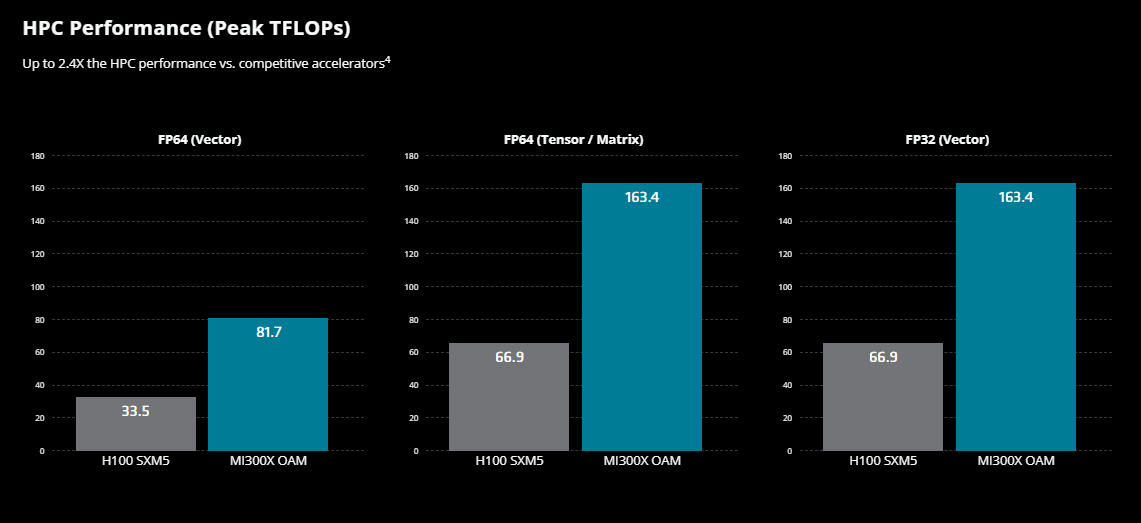

Accelerating HPC Applications

The AMD Instinct MI300 Series accelerators, powered by the AMD CDNA™ 3 architecture, include Matrix Core Technologies and support a broad range of precisions, from INT8 and FP8 (with sparsity support for AI) to FP64 for the most demanding HPC tasks. This range lets the same hardware accelerate both AI and high-performance computing (HPC) applications.

Graph courtesy of AMD